Generative AI and Foundation Models for Human Sensing Workshop

GenAI4HS @ UbiComp 2025

Where

U5 147, Undergraduate Centre, Otakaari 1

Aalto University, Espoo, Finland

When

09:00 - 13:00

12 October 2025

Call for Participation

Important Details

Submission Deadline

Acceptance Notification

Camera-ready Deadline

Submission Platform

→ Society: SIGCHI

→ Conference: UbiComp/ISWC 2025

→ Track: UbiComp/ISWC 2025 GenAI4HS

Formatting

UbiComp / ISWC 2025 Publication Templates

Paper Length

Themes and Goals

Human sensing plays a critical role in wide-ranging applications such as human activity recognition, health monitoring, and behavior analysis, often driven by the development of specialized models. Meanwhile, broadly usable, generic models, particularly those involving Generative AI and foundational models, have brought transformative advancements in other domains. Similar breakthroughs have not yet been widely observed in wearable and ubiquitous computing.

This workshop aims to bring together researchers and practitioners to discuss recent trends and challenges in building foundational models for human sensing and in integrating advances from Generative AI into sensor pipelines. The workshop will offer an opportunity for researchers to present their latest findings, acquire hands-on experience, and discuss the future of the field. It will also contain a mini-tutorial and mini-hackathon on using Generative AI and foundational models for human sensing applications, and host presentations of the accepted submissions, which will take the form of full and position papers. We particularly welcome preliminary or work-in-progress results covering topics such as, but not limited to:

- Foundational models for wearable and ubiquitous computing.

- Augmenting context descriptions with generative models.

- Multi-modal domain adaptation or representation learning for wearable and ubiquitous computing.

- Using generative models for data simulation, e.g., text-to-motion, diffusion models, and others. This also includes physical simulation and addressing the critical "sim2real" gap.

- Large Language Model (LLM) integration for ubiquitous computing.

- Challenges in practical deployment of Generative AI and foundational models in the wild.

Papers submitted for review should be 4 - 6 pages in length (excluding references). Submissions will go through a single-phase review process with at least two reviewers. They will be reviewed and selected based on their originality, relevance, technical correctness, and potential for initiating fruitful discussions at the workshop. Note that position papers are not expected to present finished research projects; we ask instead for thought-provoking ideas or initial explorations of a topic. At the workshop, accepted submissions will be presented in a 10-minute presentation. At least one author of an accepted submission must attend the workshop. All accepted workshop papers can be included in the ACM Digital Library (DL) and the supplemental proceedings.

Program

The half-day workshop (09:00 - 13:00) will take place on Sunday, 12 October 2025.

Welcome and Introductions

An introduction given by the organizers with a summary of the workshop's themes, goals, and outline.

Paper Presentations - Session 1

A set of 15-minute paper presentations.

Coffee Break

A coffee break and poster session organized by UbiComp / ISWC 2025.

Paper Presentations - Session 2

A set of 15-minute paper presentations.

Activity

A hands-on activity on the exploration of LLMs for HAR.

Final Remarks

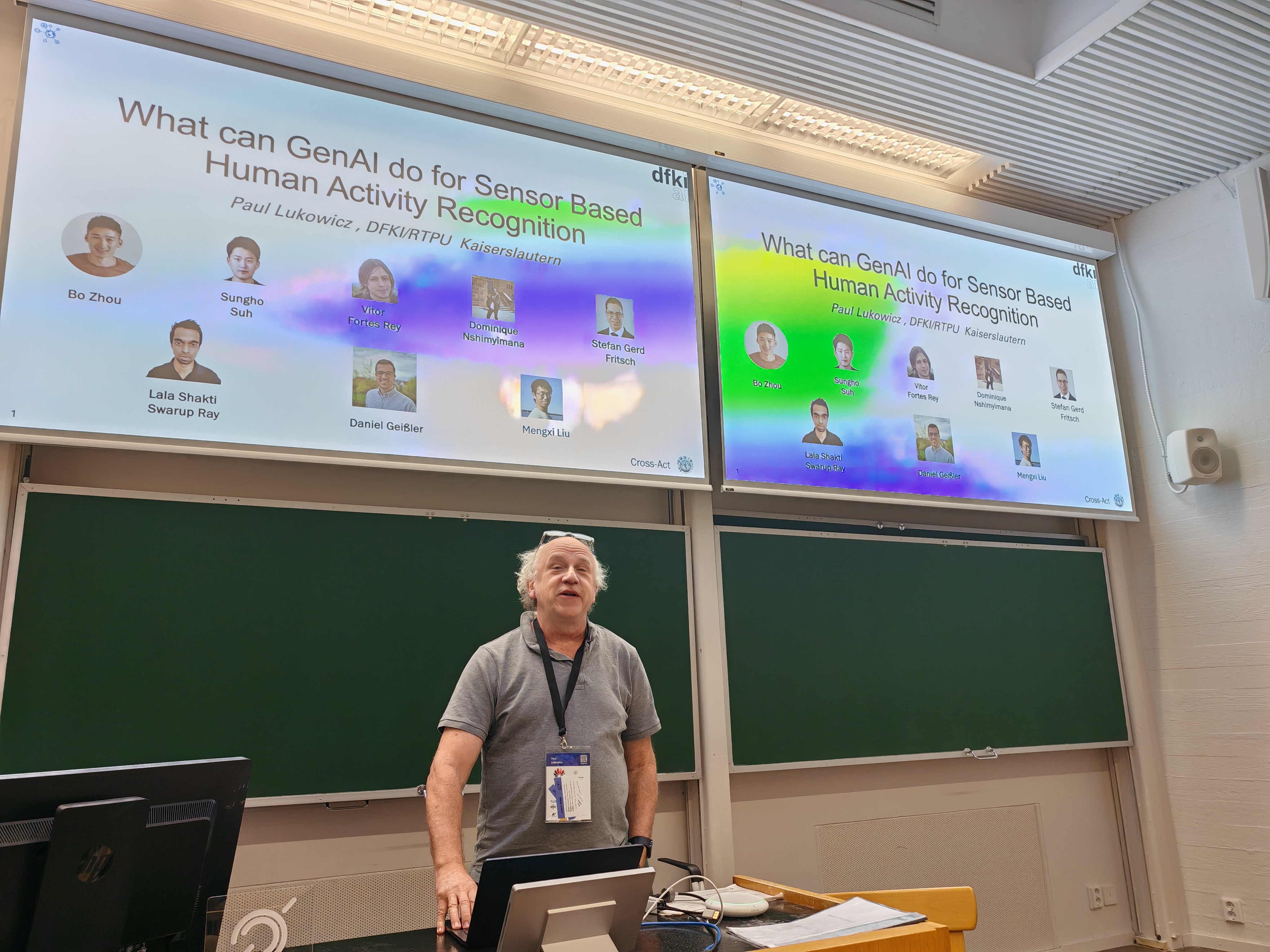

Speaker

Paul Lukowicz

DFKI and RPTU

Accepted Papers

LLaSA: A Sensor-Aware LLM for Natural Language Reasoning of Human Activity from IMU Data

Sheikh Asif Imran Shouborno (Worcester Polytechnic Institute), Mohammad Nur Hossain Khan (Worcester Polytechnic Institute), Subrata Biswas (Worcester Polytechnic Institute), Bashima Islam (Worcester Polytechnic Institute)

🏆 Best Paper Award

Exploring Generalist Foundation Models for Time Series of Electrodermal Activity Data

Leonardo Alchieri (Università della Svizzera Italiana), Lino Candian (Università della Svizzera Italiana), Nouran Abdalazim (Università della Svizzera Italiana), Lidia Alecci (Università della Svizzera Italiana), Dr Giovanni De Felice (Università della Svizzera Italiana), Silvia Santini (Università della Svizzera Italiana)

Revisiting Multi-Agent GAN for Multimodal Time Series Generation in Human Sensing and mHealth Applications

Flavio Di Martino (IIT-CNR), Shankho Subhra Pal (IIT-CNR), Franca Delmastro (IIT-CNR)

A Multi-Agent LLM Network for Suggesting and Correcting Human Activity and Posture Annotations

Ha Le (Northeastern University), Akshat Choube (Northeastern University), Vedant Das Swain (New York University), Varun Mishra (Northeastern University), Stephen Intille (Northeastern University)

Thou Shalt Not Prompt: Zero-Shot Human Activity Recognition in Smart Homes via Language Modeling of Sensor Data & Activities

Sourish Gunesh Dhekane (Georgia Institute of Technology), Thomas Ploetz (Georgia Institute of Technology)

Organizers

Harish Haresamudram

Georgia Institute of Technology

Chi Ian Tang

University of Cambridge

Megha Thukral

Georgia Institute of Technology

Vitor Fortes Rey

DFKI and RPTU

Sungho Suh

Korea University

Bo Zhou

DFKI and RPTU

Paul Lukowicz

DFKI and RPTU

Thomas Plötz

Georgia Institute of Technology

Downloads/Resources

CSV File for Exploration https://tinyurl.com/data-har

Exploration Prompts: Google Doc

Registration and Attendance

Participants can register via the UbiComp 2025 Conference Registration (Open now!)

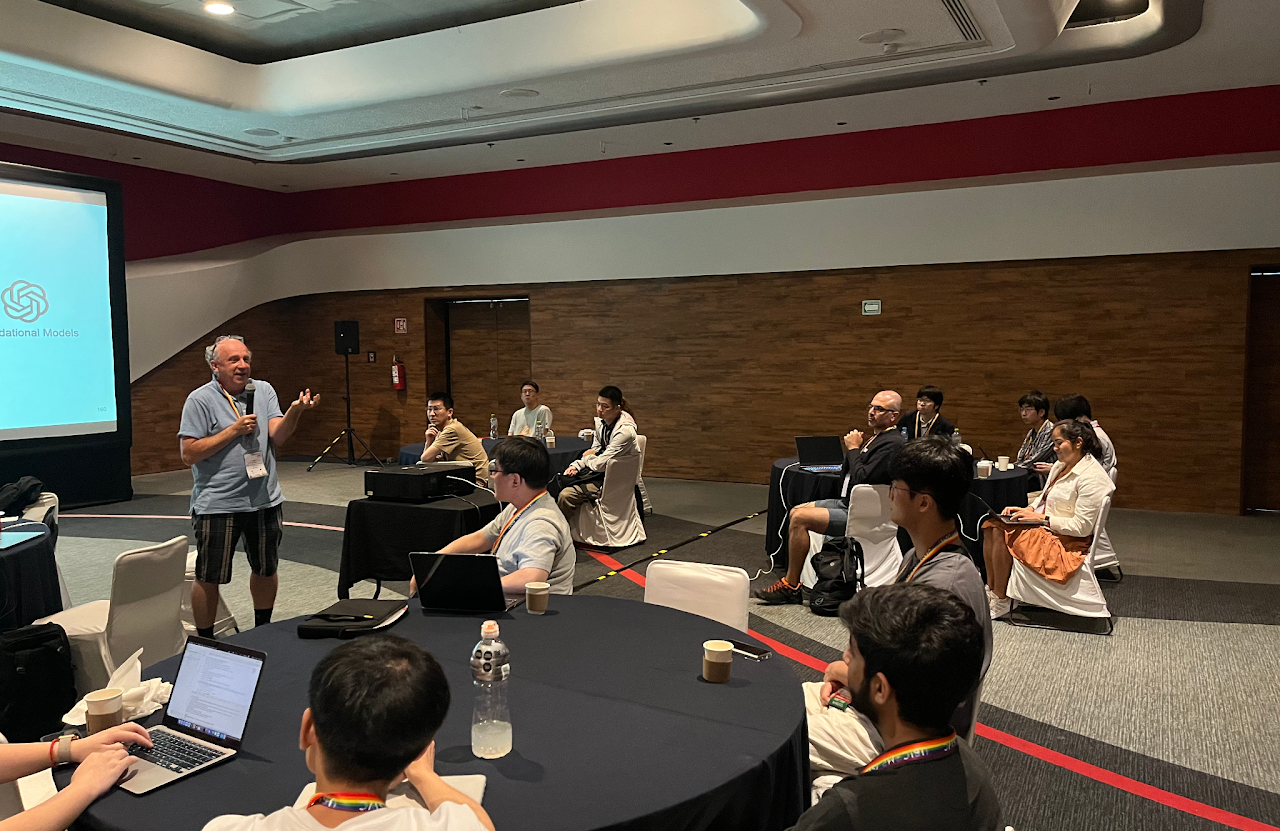

Past Photos

This workshop builds upon the successful Ubicomp Tutorials On Solving The Activity Recognition Problem (SOAR).

SOAR @ Ubicomp 2024

SOAR @ Ubicomp 2023

Contact

Email Us

hharesamudram3 at gatech.edu

thomas.ploetz at gatech.edu

cit27 at cl.cam.ac.uk